Designing for Privacy in Digital Spaces

Discover how people feel about giving away their personal data to algorithmic recommendation systems

Posted in

UX Research

Date

May 5, 2024

TL;DR

Personalization Paradox: There is a clear tension between the desire for personalized content and concerns over data security

Agency in Digital Spaces: Users feel a lack of control over their digital experiences

Transparency on Data Usage: Users are concerned about misuse of their data and want transparency about how it is used

User control: Users yearn for greater control over privacy and recommendation settings

Introduction

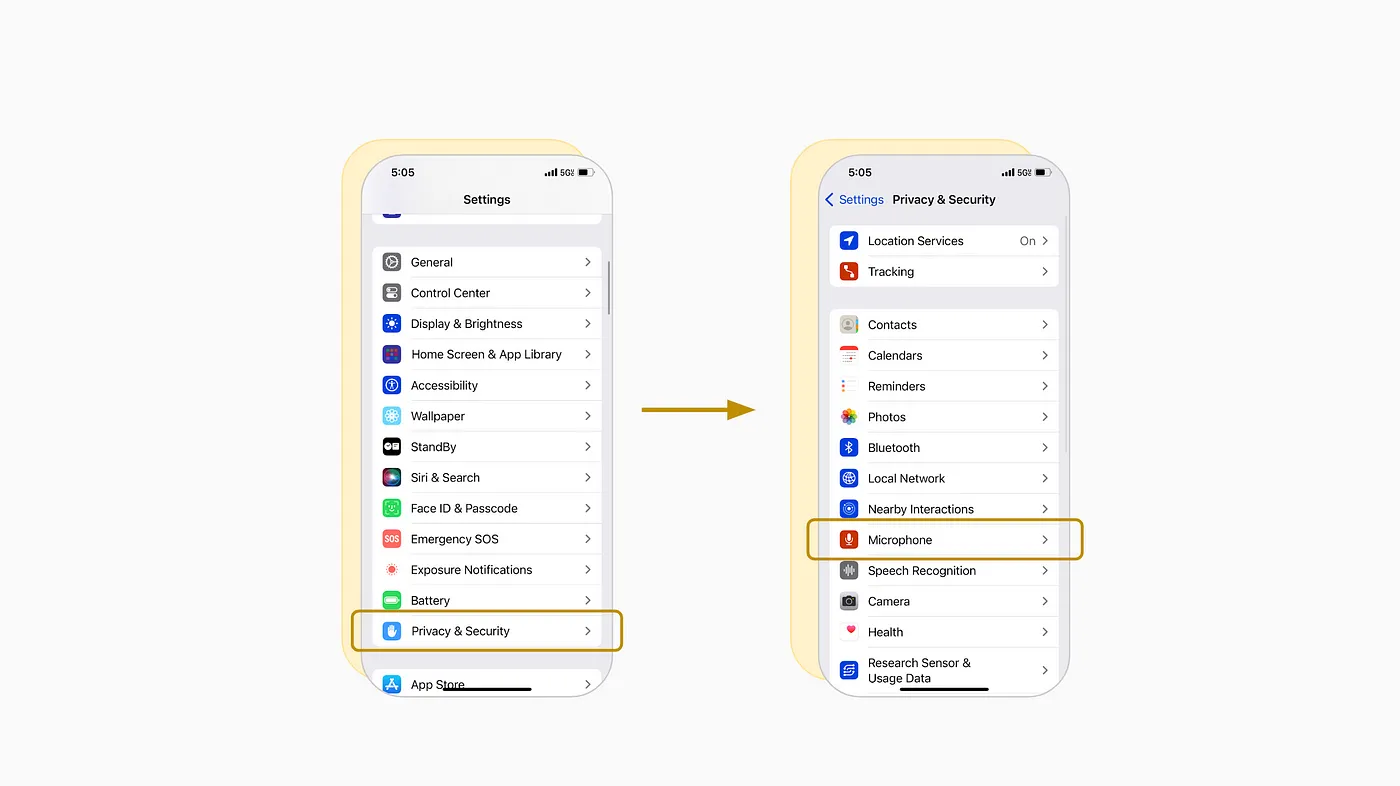

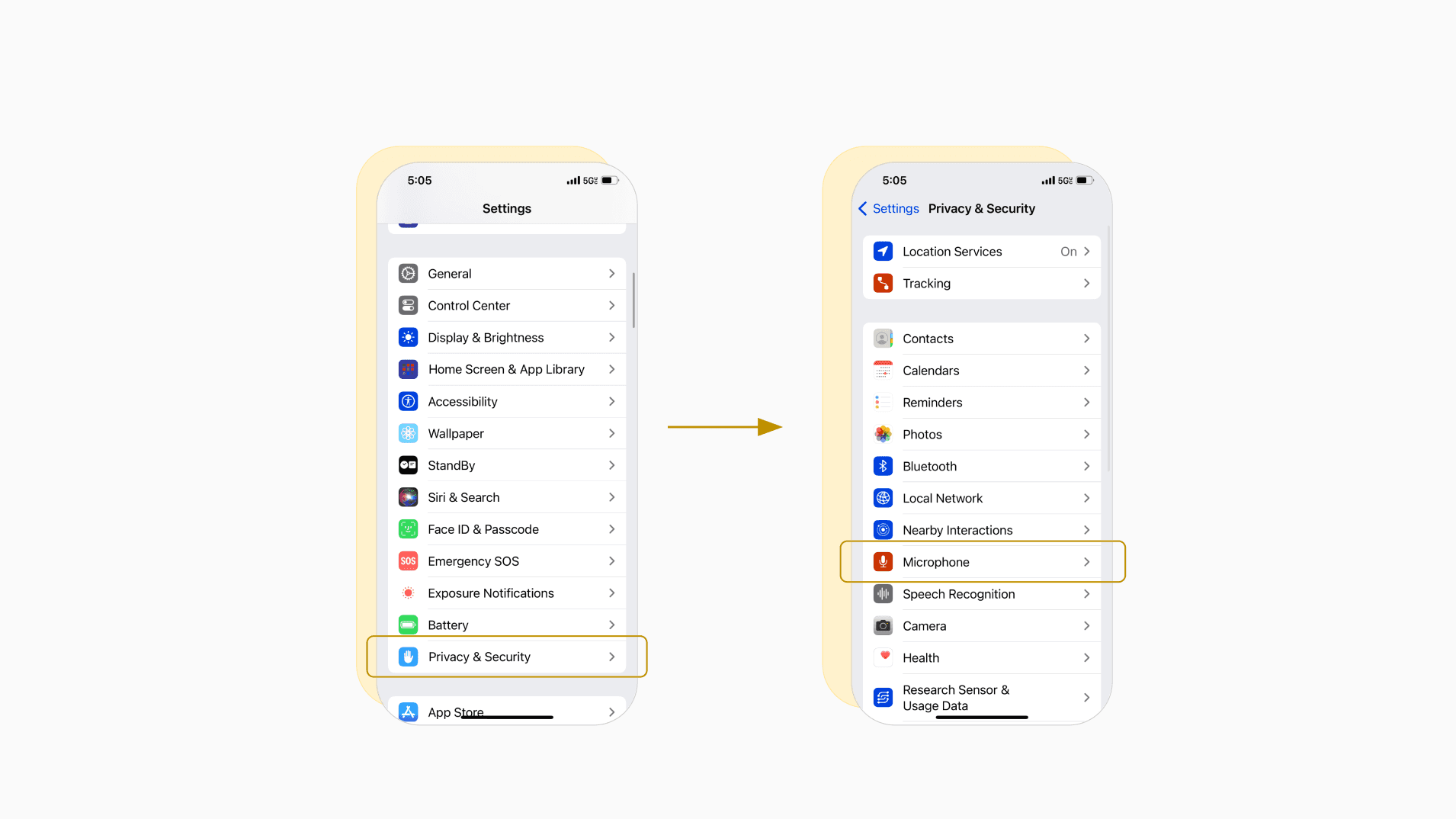

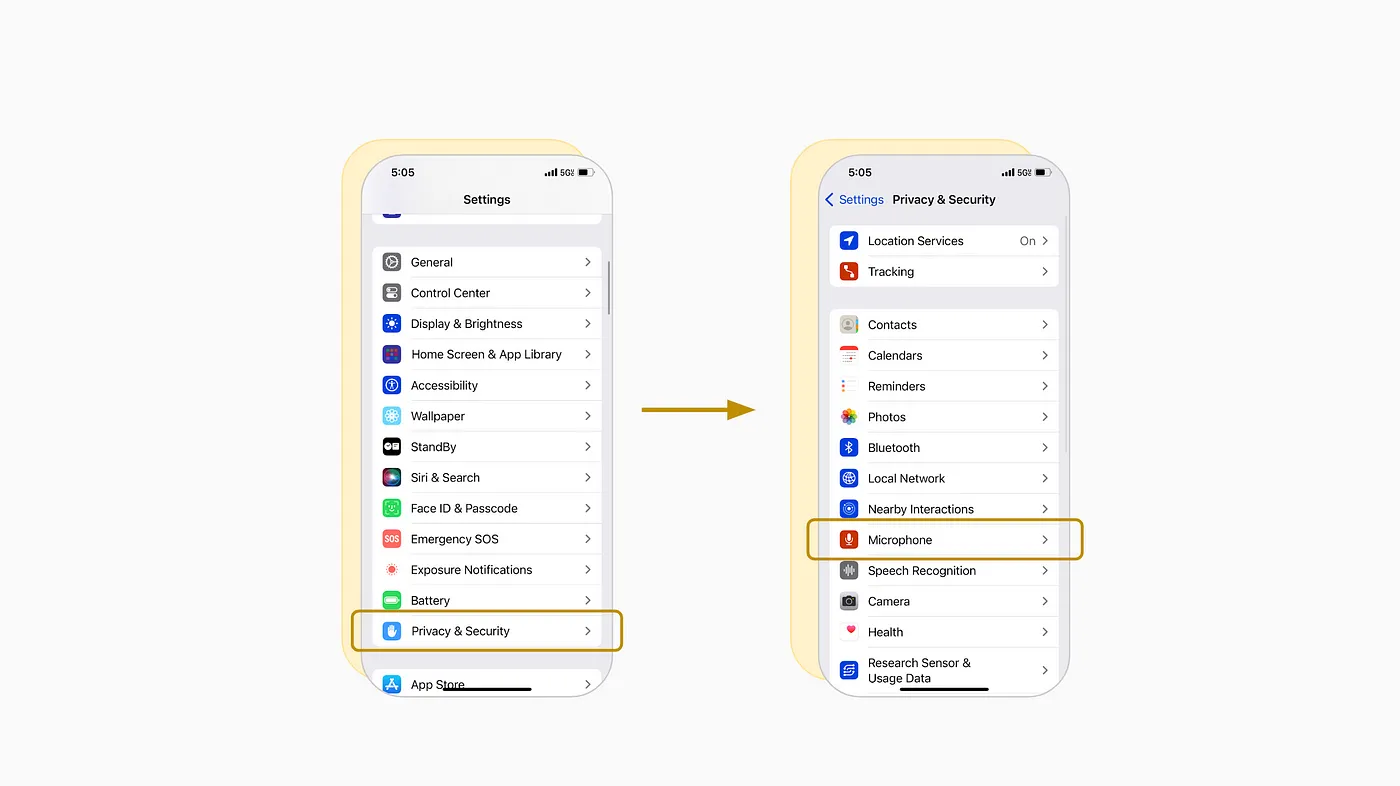

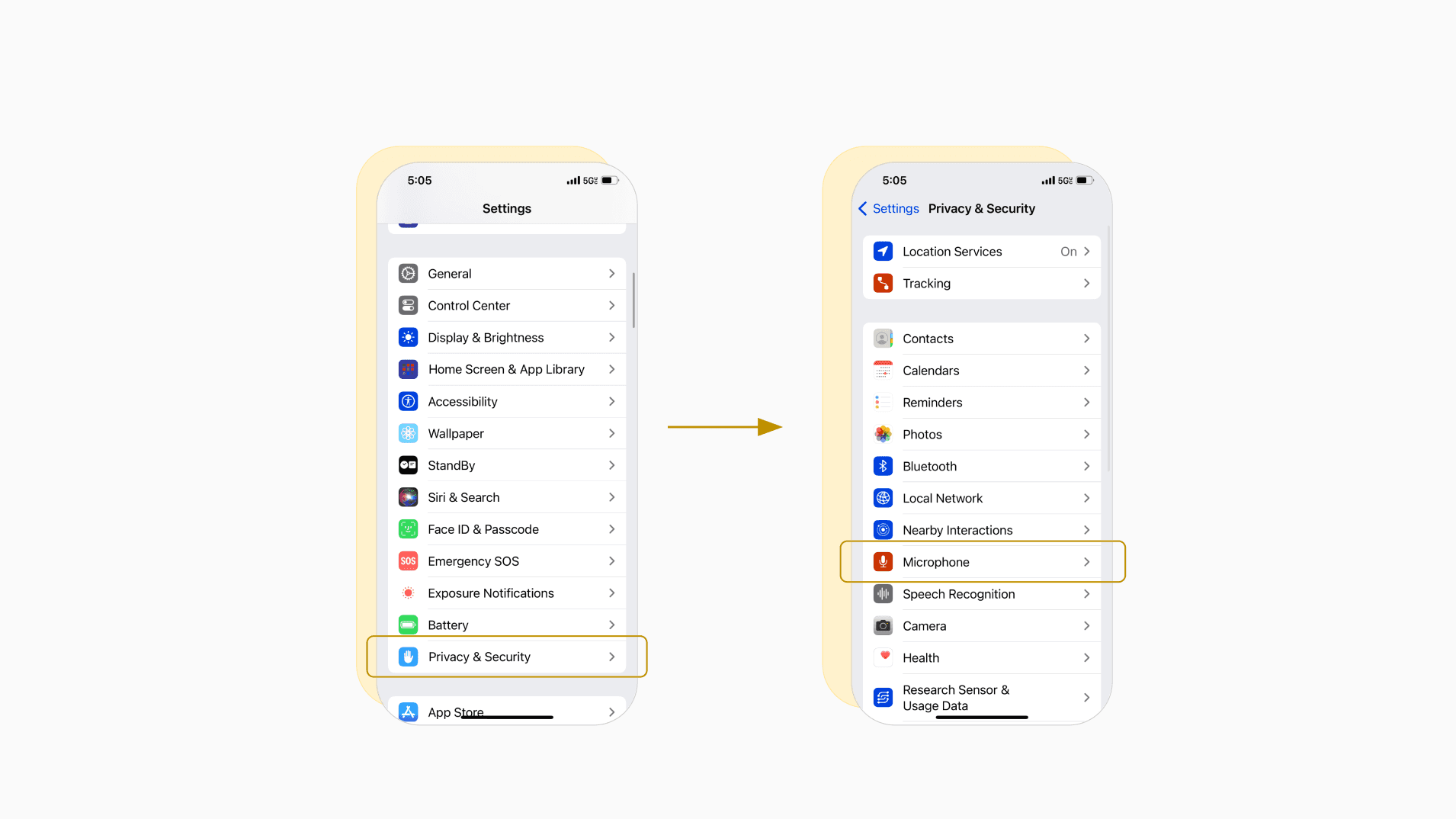

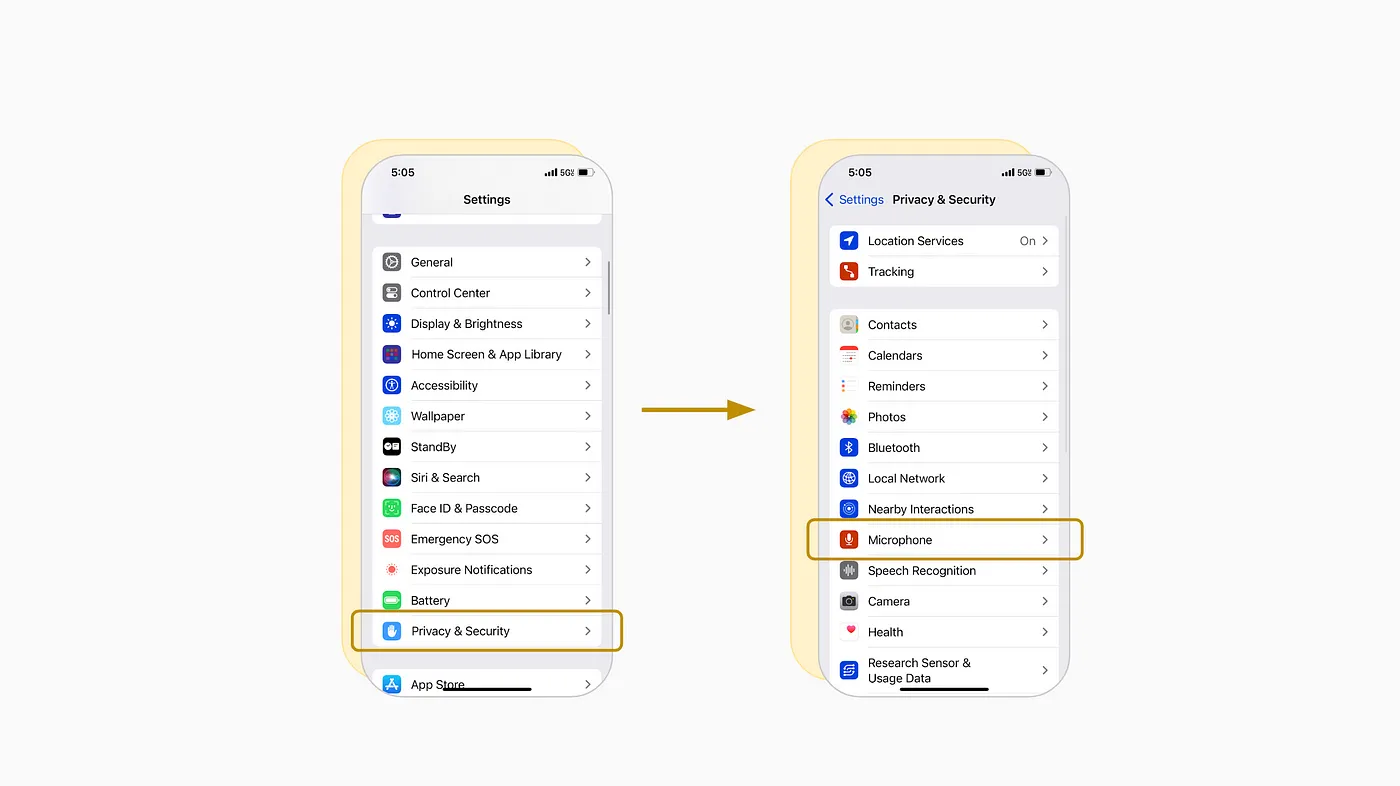

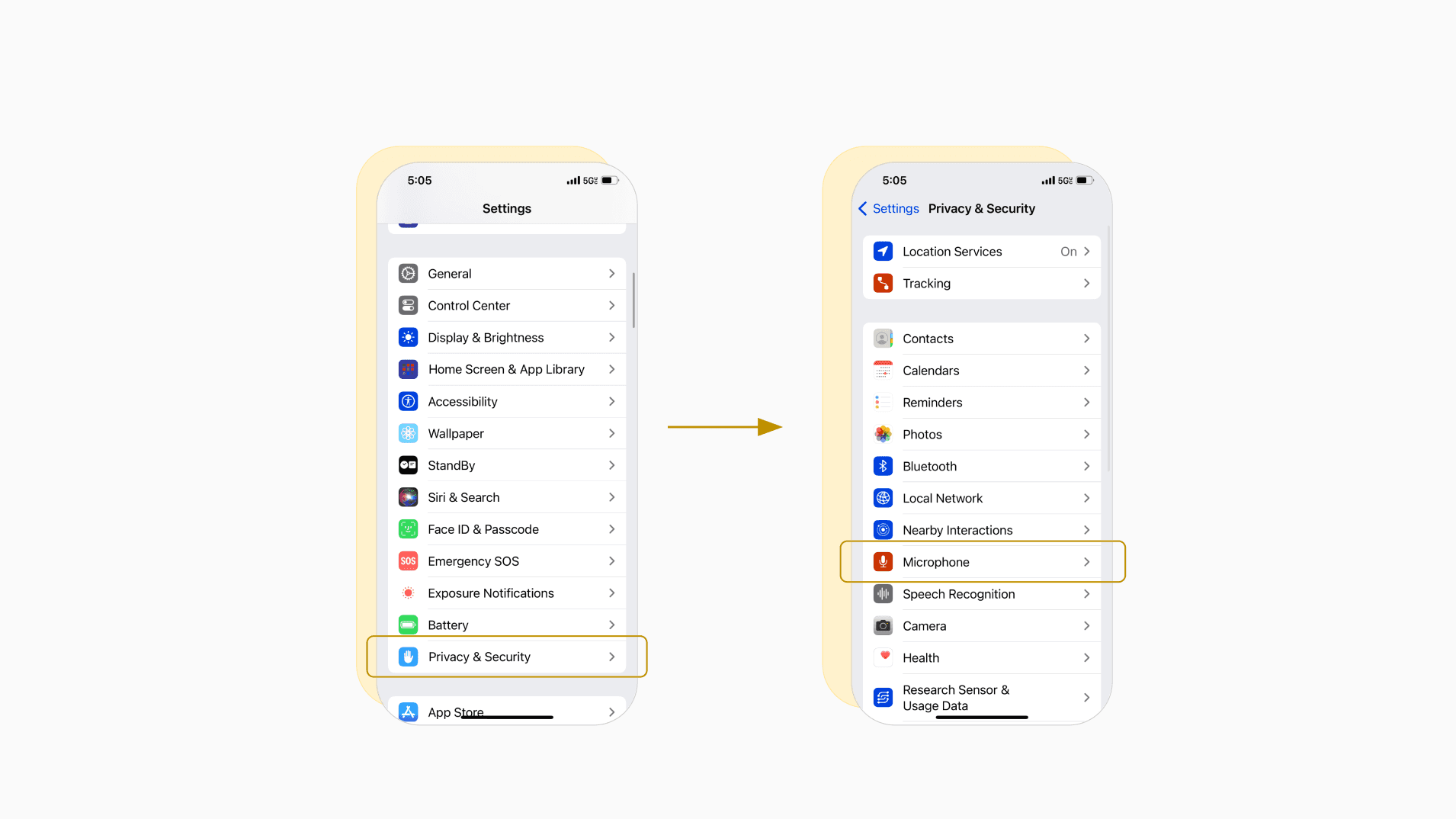

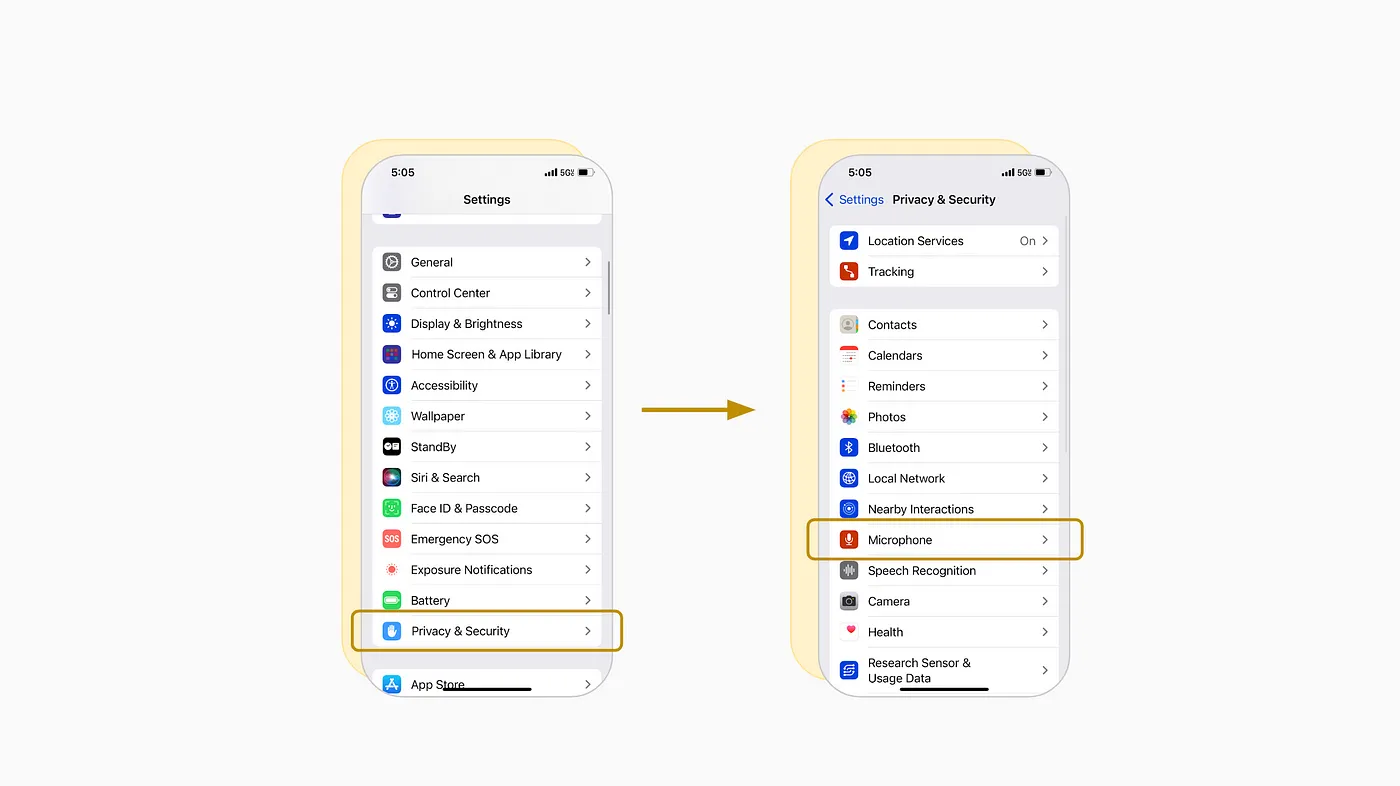

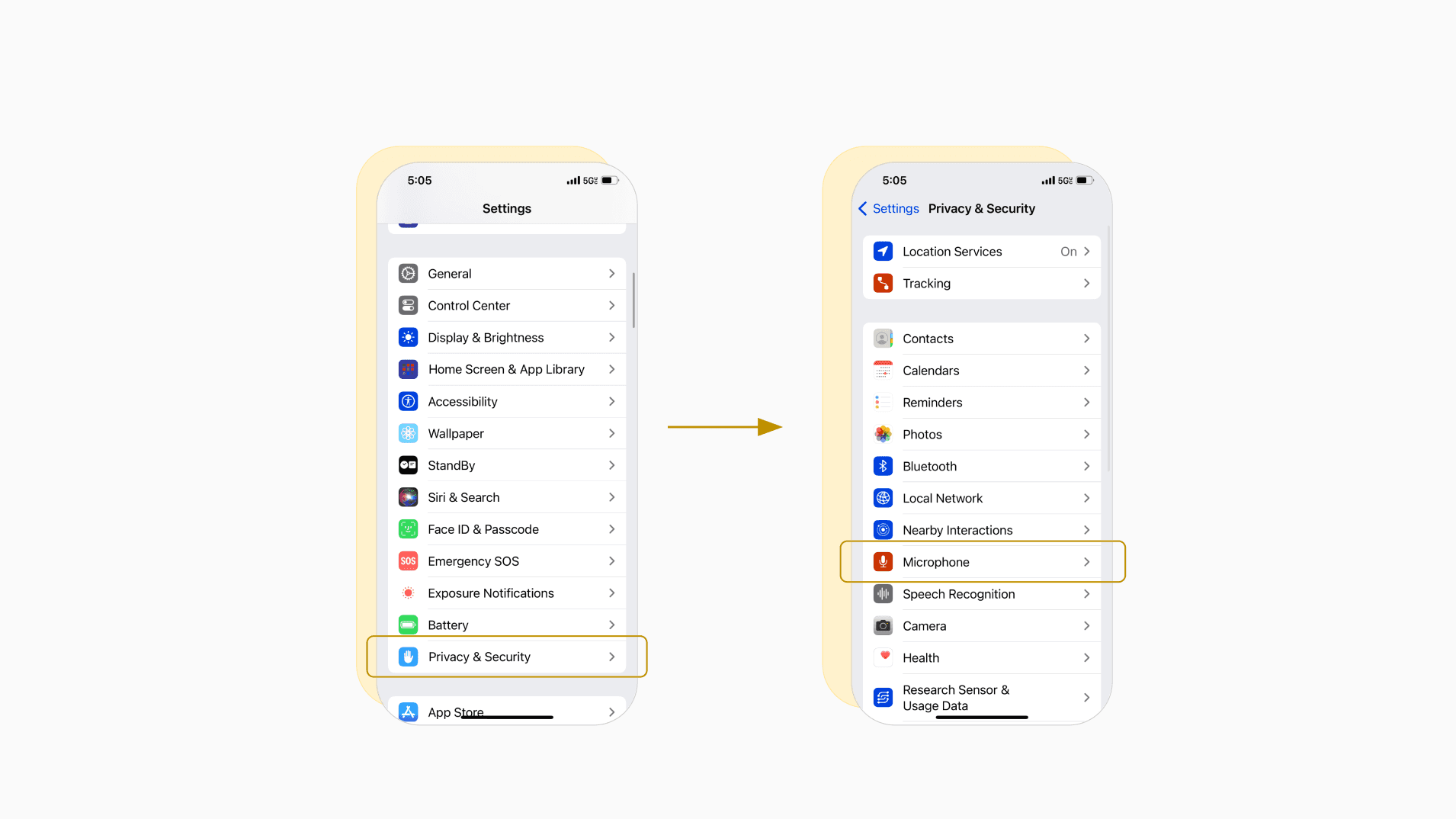

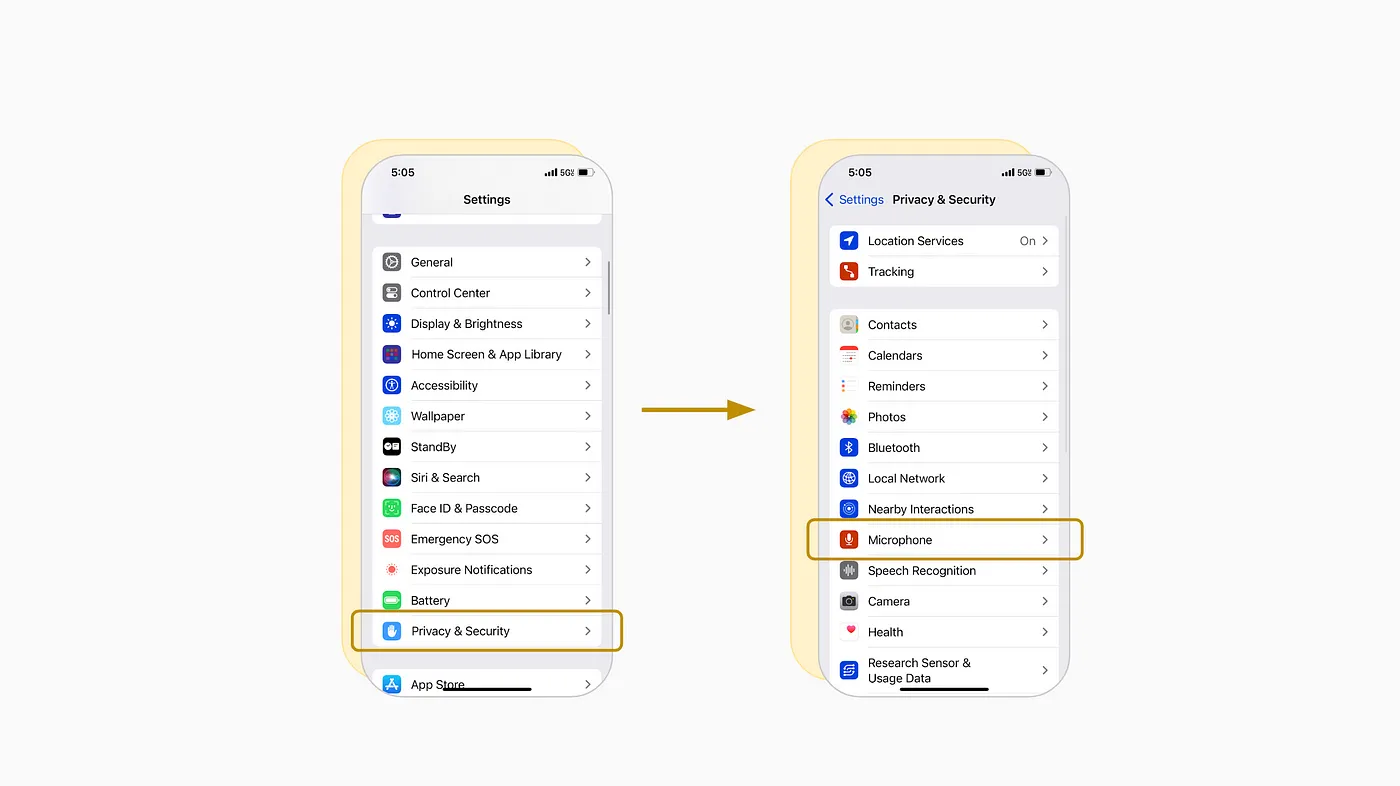

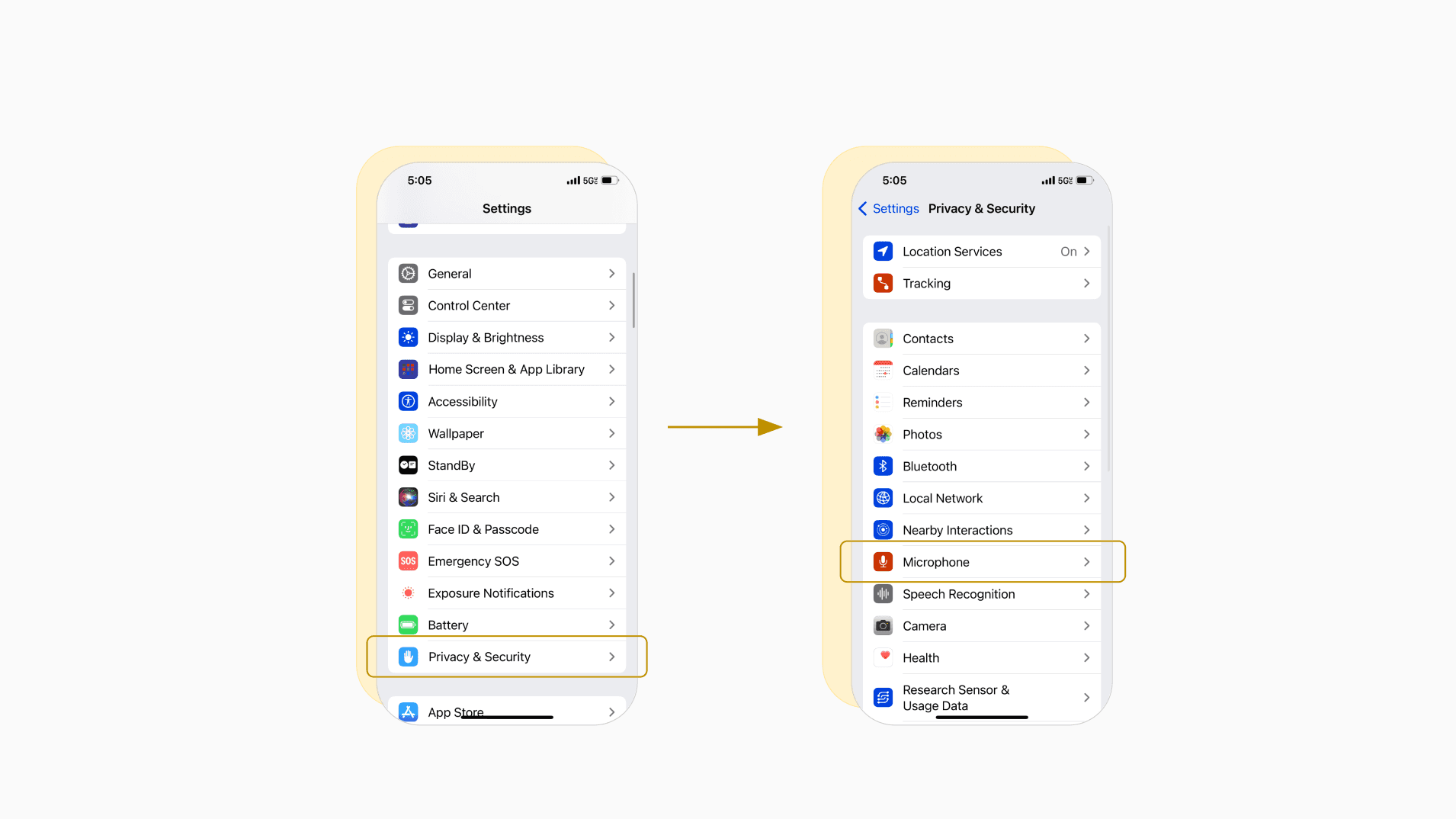

Let’s do a quick experiment: Do you know how many apps have access to your microphone?

Go to your settings, scroll down to ‘Privacy & Security’, then select ‘Microphone’. Quite a list. Wouldn’t you agree?

In an era dominated by digital platforms like YouTube and TikTok, Gen Z users are increasingly confronted with a dilemma: the trade-off between enjoying personalized content recommendations and maintaining their personal data privacy.

Research

My research team and I (as a part of coursework) conducted a study to uncover the nuances of Gen Z’s privacy concerns and their interaction with personalized content systems.

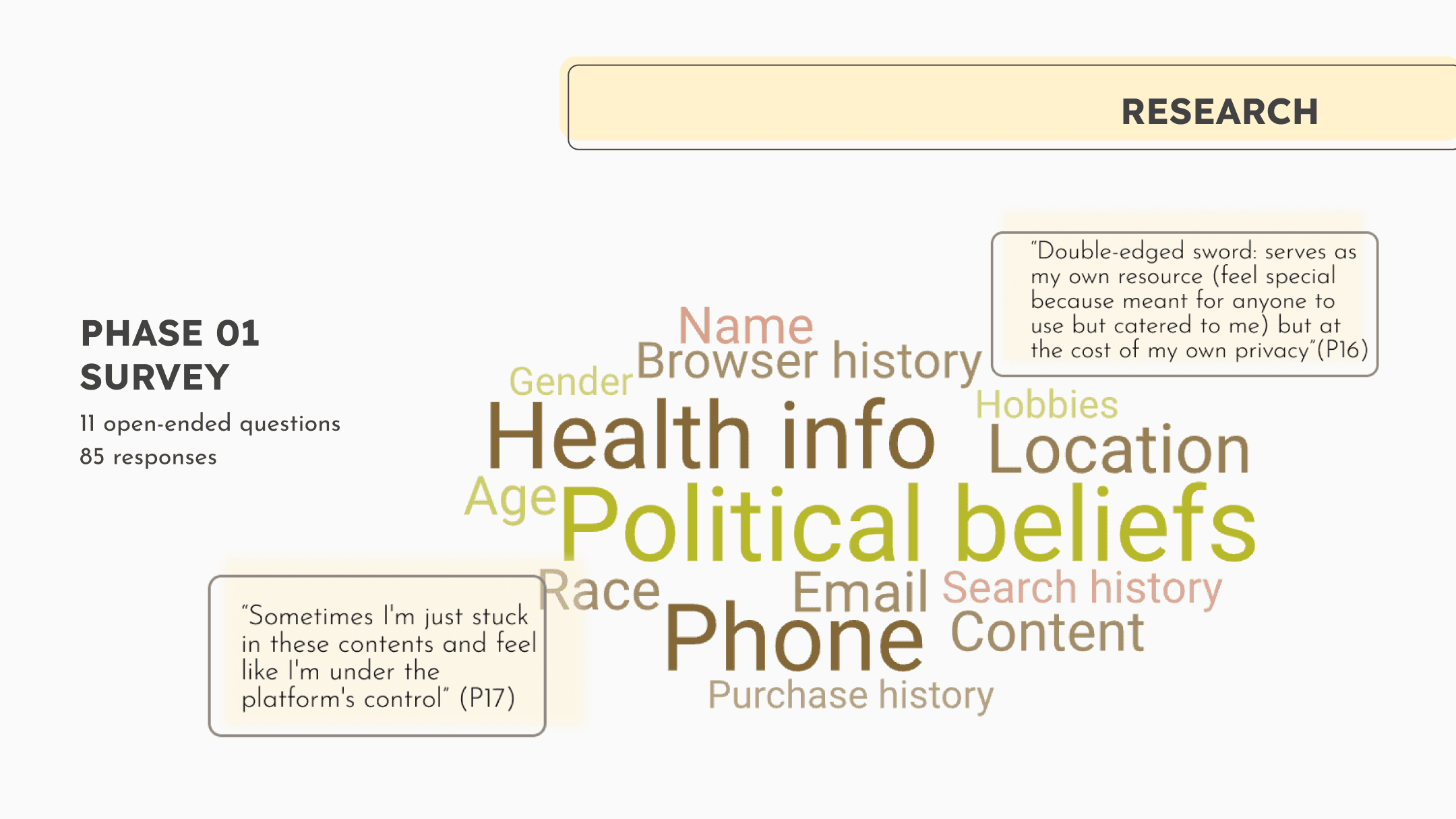

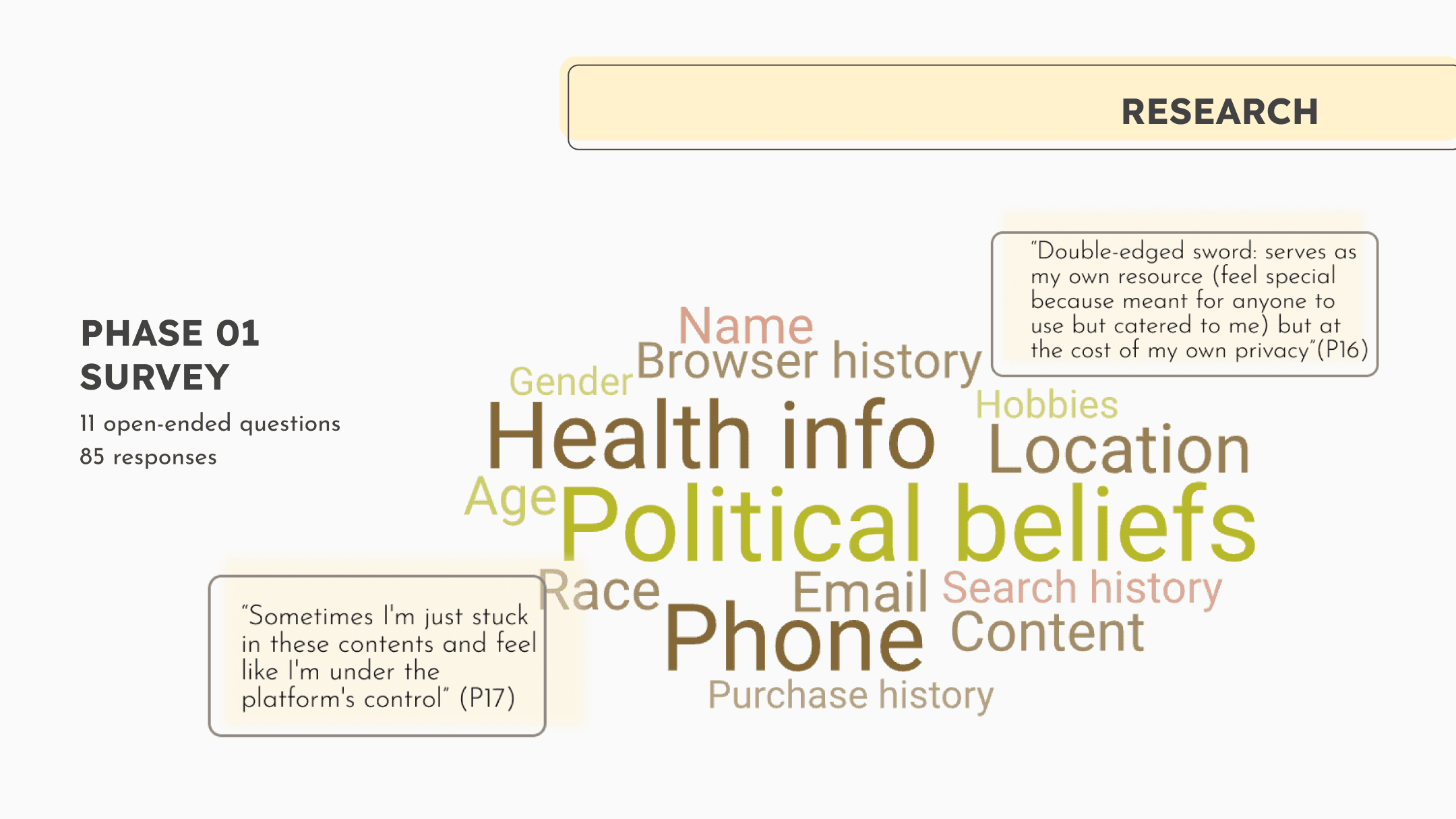

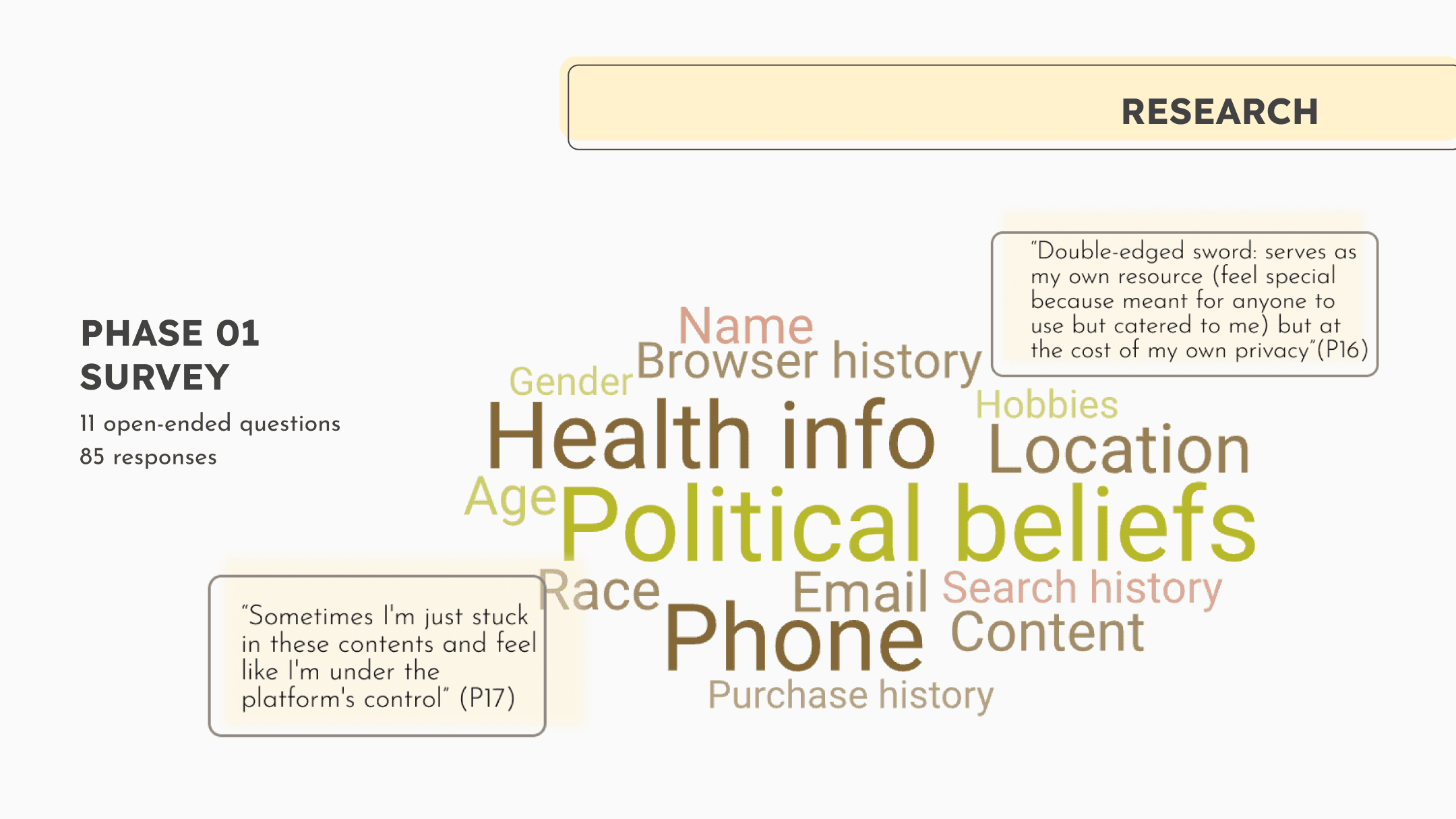

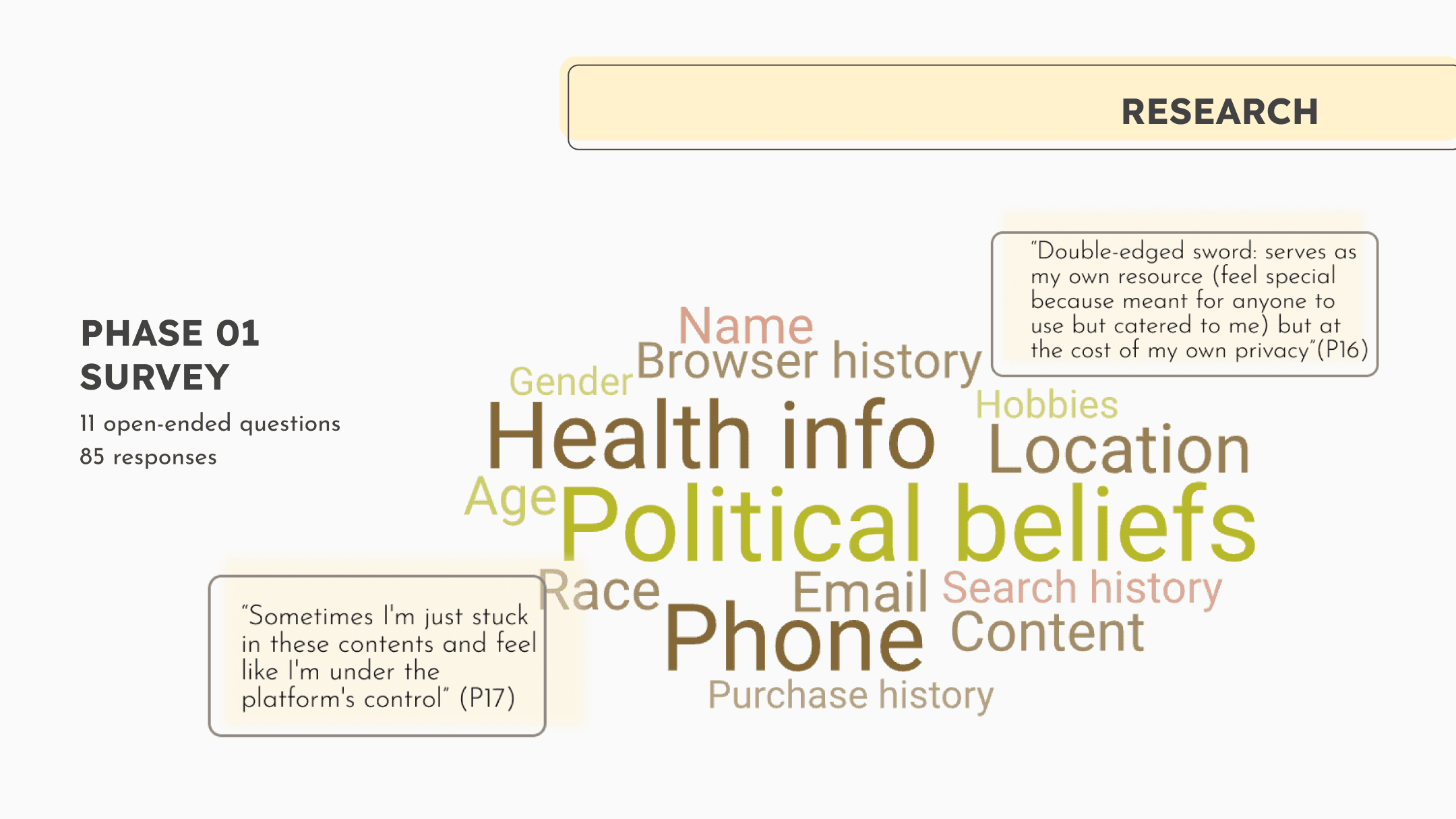

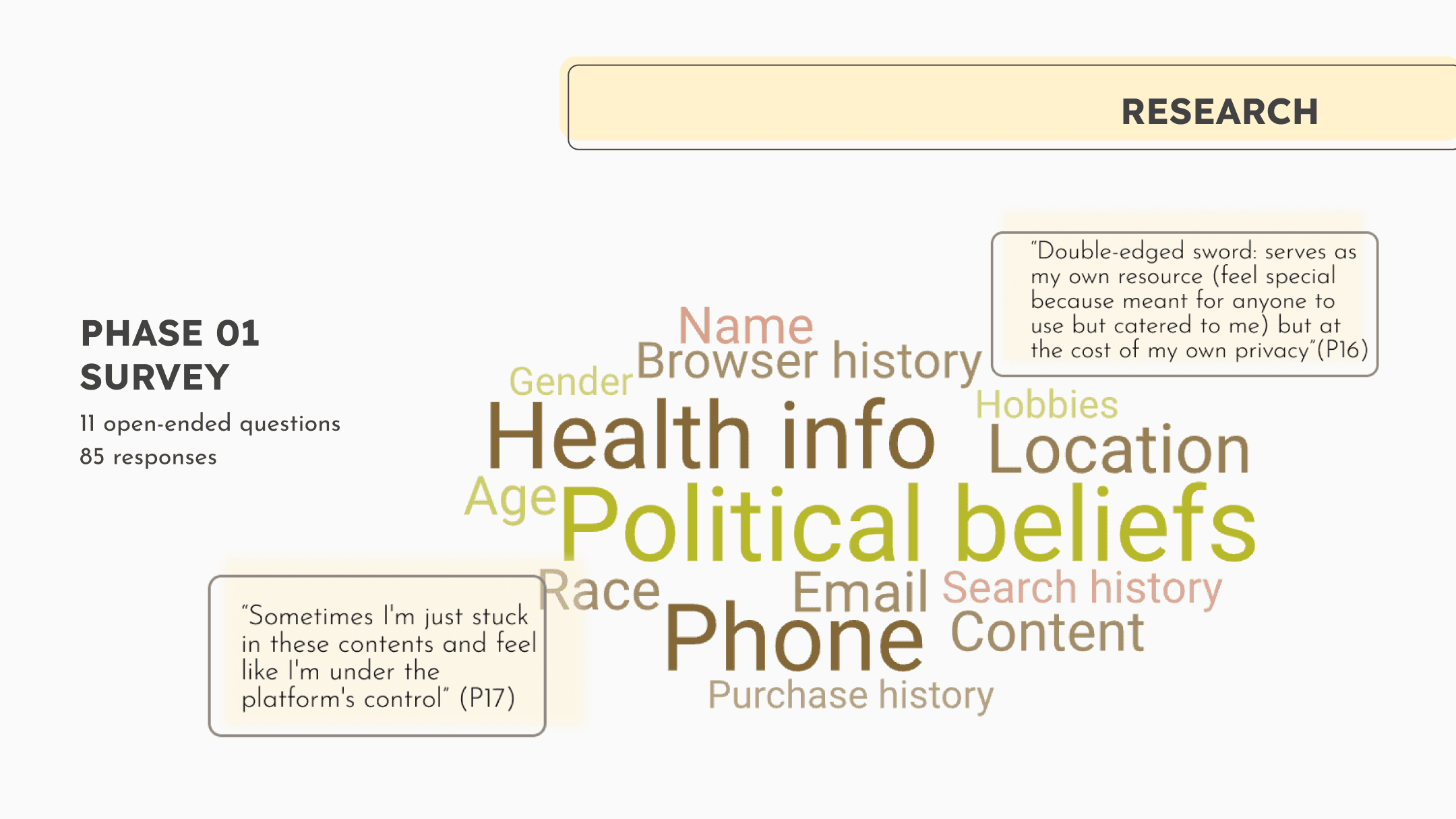

Phase 1

We began with a qualitative survey of 85 participants to gather preliminary insights. This phase established a foundational understanding of the key themes and concerns around privacy and personalization.

Phase 2

We then conducted in-depth interviews with participants selected from the initial survey respondents. This phase aimed to explore what users need in order to feel in control when managing their data privacy and content preferences. (To be covered in another article.)

We used affinity mapping and qualitative coding to analyze the collected data and distill it into insights.

Results

Phase 1 surfaced several key insights, including Gen Z’s need for trust, knowledge, choice, and control regarding their data and content recommendations.

Users are INSECURE about their data

Users are torn; they want the convenience and relevance of personalized recommendations but are concerned about data leaks.

They see personalized content as a double-edged sword: a tool that enhances their digital experience by offering content tailored to their interests while simultaneously exposing them to potential harm.

Users feel TRAPPED by algorithmic recommendations

Users worry that content personalization restricts their ability to freely explore diverse information, trapping them in an echo chamber.

They voice frustration over the mandatory sharing of personal data as a prerequisite for accessing online services or even using the device.

Users are scared of MISUSE of data they share

Participants felt that content platforms lack data-sharing controls, which leads to data being shared without users’ awareness or consent.

They do not feel comfortable with personalized content systems fetching data from various unidentified sources to adjust recommendations.

Users need CONTROL over their data

Users apply various methods, such as changing passwords, using separate accounts, and requesting removal of personal data, to control and protect their privacy.

Participants intentionally modify their feeds to avoid content they dislike rather than to seek out more content they like.

Design Recommendations

Based on these findings, we formulated a set of design recommendations for digital platforms:

Enhance User Choice and Relevance: Platforms should allow users to have more say in what data they share, focusing on relevance and non-invasiveness.

Boost Transparency: Information about data usage, privacy policies, and content personalization algorithms should be made more accessible and understandable.

Facilitate Manual Content Adjustments: Users should be empowered to tailor their content feeds more precisely to their preferences.

I hope this article shed some light on Gen Z’s nuanced perspectives on privacy and personalization, including their concerns and their desire for control. By understanding these insights, designers and developers can better align digital platforms with user expectations, ultimately crafting experiences that prioritize privacy while enhancing personalization.
